Part of our Health, Wellness & Life Science Series

Introduction

The goal of precision medicine is to identify possible groups of patients with atypical responses to treatment or unique healthcare needs, and to design the most appropriate intervention. Precision medicine has the potential to improve traditional symptom-driven practice of medicine, and to allow preventive interventions using predictive diagnostics.

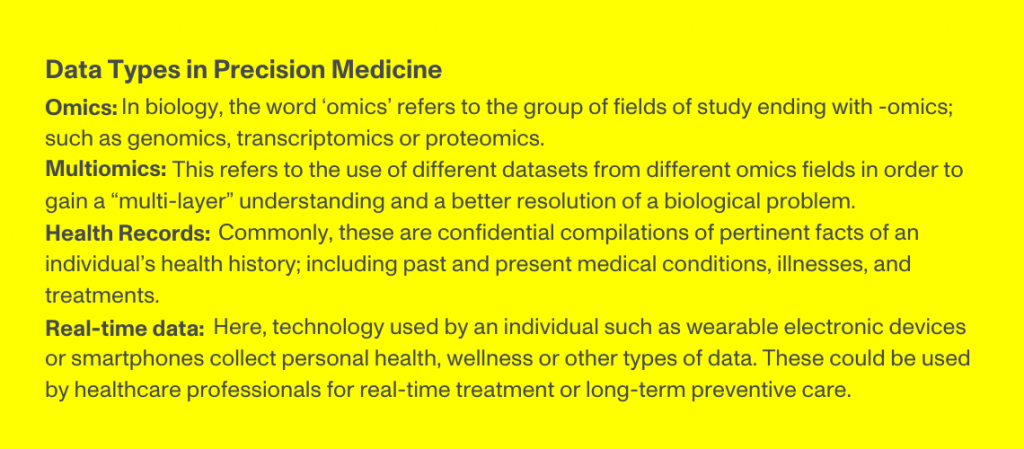

Progress in molecular technologies has led to vast amounts of human health-related ‘omics’ data that will greatly expand our understanding of human biology and health, and hopefully drive personalised medicine. Each type of omics dataset is specific to a single layer or aspect of a disease. Most diseases, however, affect complex molecular pathways where different biological layers interact with each other. Hence, there is a need for ‘multiomics’ approaches that encompass multiple layers of data and draw a more complete picture of the disease (inset).

Despite current advances, most precision medicine efforts today are manual or semi-automated, and are time-consuming. The majority are also unable to facilitate on-demand analysis of diverse human datasets to impact critical treatment windows and predict potential disease risks. Currently, there is still no platform available that can efficiently integrate medical records, multiomics, and epidemiological data acquisition, enabling effective management of data analytics with a user-friendly physician-oriented interface.

Beyond omics data, the ubiquitous use of the Internet and the widespread availability of smartphones suggest that the clinical and biological domain can be significantly enhanced with patient-generated data. These could be physical activity data, dietary intake, blood pressure or other data collected by wearable devices.

Data challenges

The use of multiomics data presents a lot of challenges. These include the presence of missing values, class imbalance, heterogeneity, the fact that patterns often involve many molecules from different types of omics data. The fact that certain datasets are often only collected from a limited number of samples is also a problem, because the number of variables in these datasets greatly exceeds the number of samples (curse of dimensionality).

In vivo, real-time data are the best types of data for the design of personalised treatments. However, using this kind of data is nearly impossible because of the lack of comprehensive datasets and the low number of samples of most published studies. To help with this problem, in vitro studies, for example with cancer cell lines or patient-derived xenograft mice models, could provide useful datasets. These datasets are rich and complex and can be used to train models of the disease.

The lack of comprehensive, good quality, accessible, updated, and correct data sources, are common occurrences in the field. To address this, research communities have been building popular public data resources over the years.

The field of oncology benefits from several popular databases such as The Cancer Genome Atlas (TCGA) or the Genomics of Drug Sensitivity in Cancer (GDSC). The latter, in particular, collects data from more than a thousand genetically characterised human cancers screened against a variety of cancer therapeutics.

Public data sources represent an extremely valuable resource. However, for building comprehensive disease models, these often do not provide the required quality and completeness. To overcome this problem, when available, one could access dedicated and curated datasets from companies that offer this type of data products for a licensing fee.

Beyond molecular data, clinical information has the potential to enrich and complement genomics and other omics data such that the combined predictors will perform better than the individual classifiers based only on a single type of data. However, personal health records are regulated by strict privacy laws and rules, which limit their use in generalised or public applications, and complicate their commercial use

Barriers in linking health information across different sources slow down healthcare research and the development of individualised care. The same problem applies to patient-generated data via for example wearable technology or smartphones. The independent and heterogeneous management of Electronic Health Records (EHRs) by health institutions poses challenges in the integration of this information. In particular, some of the challenges are: 1) the heterogeneity of the file formats and access protocols; 2) the presence of multiple schema or data structures; and 3) different or ambiguous semantics (e.g. meaning and interpretation of definitions).

Combining different types of data

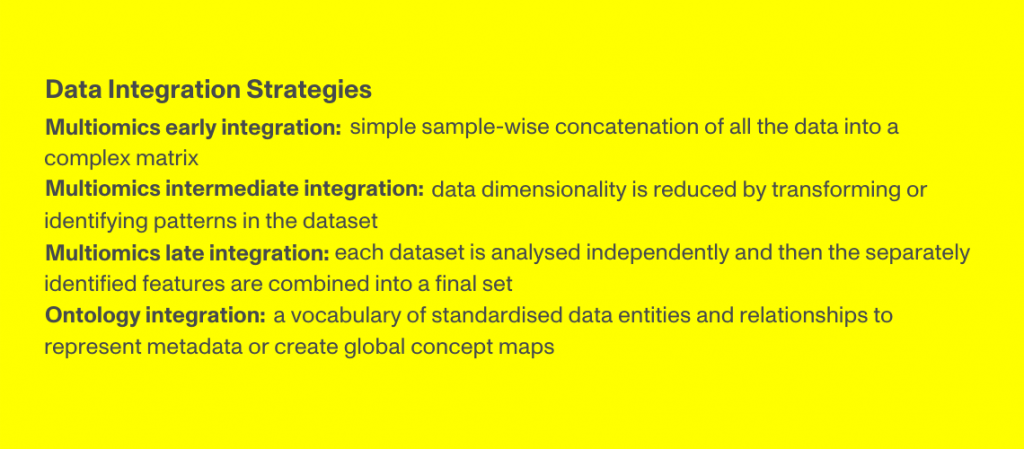

For omics datasets, integration methods can be very broadly grouped in three approaches: Early, Intermediate and Late Integration. In the first approach, datasets can be simply assembled via sample-wise concatenation into an often large data matrix. The resulting complex dataset from this methodology cannot easily be used by downstream applications, such as Machine Learning, which struggle to learn from such complex dataset (inset).

Some methods have been developed with the ability to find semi-shared structures, or patterns, shared between some omics but not all. The main advantage of such methods (Intermediate Integration) is to discover the joint inter-omics structure, that can then be used to obtain a simpler representation of the combined dataset (dimensionality reduction).

The most straightforward integration strategy, the Late Integration, is to actually apply analytical techniques such as ML models to each dataset separately, and then combine the respective best predictive features. The shortcoming of this approach is that it cannot capture inter-omics patterns, and at no point in the process ML or other analytical techniques would share knowledge or utilise the complementary information between datasets.

Utilising the methodologies above, public datasets that collect drug response data (e.g. DrugBank, ChEMBL) can be combined for example with datasets containing genetic and molecular cancer data (e.g. GSDC). These datasets can be used to build a generic ML model that can be used to predict drug responses, or identify the most appropriate drug, with data and compounds not originally used to train the model. This methodology is called Transfer Learning (for more information check our Blog article: “A Streamlit app to browse drug sensitivity predictions based on Transfer Learning”).

To regard health records and other types of data, where semantic integration is critical, one of the strategies adopted is the use of ontologies. Practically, an ontology is a standardised and controlled vocabulary for describing data elements and the relationships between the elements. Designed this way, an ontology would formally and computationally represent a dataset.

Several biomedical ontologies are already available and used in medicine. These include for instance the International Classification of Diseases (ICD) and the Systematised Nomenclature of Medicine and Clinical Terms (SNOMED CT).

Conclusion

With ever growing access to biological data, new tools for omics and other data integration strategies are continuously being proposed. Nonetheless, it is urgent that we identify the best practices, tools and strategies for the integration of vastly diverse and rich data types for applications in precision medicine. In that aspect, benchmark studies are also particularly important and should be done more frequently.

The advances of deep learning models in this area are also quite compelling. Their flexible architectures facilitate the integration of multiple omics datasets, which can also be combined with biomedical images, patient health records and other types of data, offering a better grasp of a patient’s pathology.

Precision medicine demands interdisciplinary expertise that understands and bridges multiple disciplines. The transdisciplinarity nature of this field pushes for a knowledge discovery that goes beyond the individual disciplines. In order to practically achieve this in both commercial and non-commercial settings, new paradigms for data collection and data applications are necessary.

Find more Health, Wellness & Life Science technology and digital strategy content